- What Is Annotation Project Management?

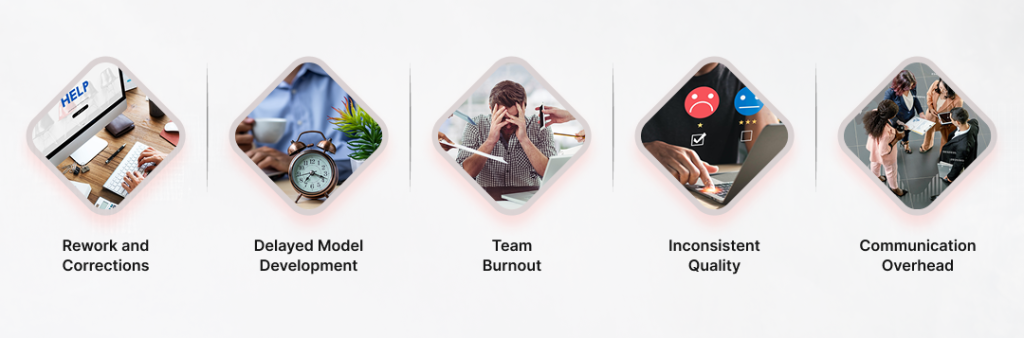

- The Hidden Costs of Poor Annotation Management

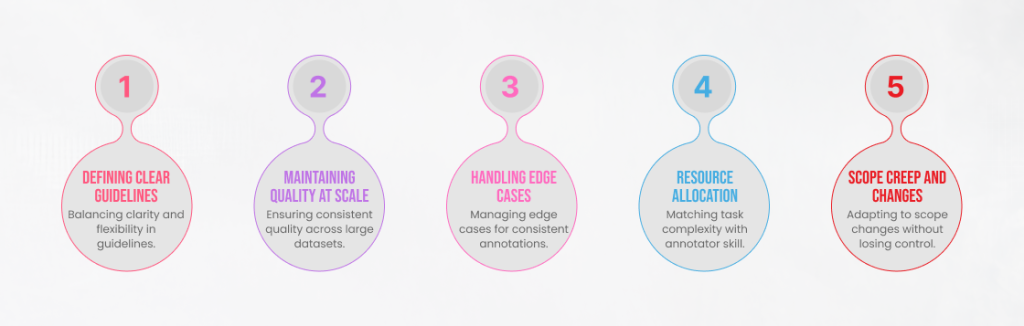

- Key Challenges in Managing Annotation Projects

- What Good Annotation Project Management Looks Like

- The GetAnnotator Approach for your projects

- Comparing Management Approaches

- When to Scale Up Project Management Support

- Choosing the Right Management Tier

- The ROI of Good Project Management

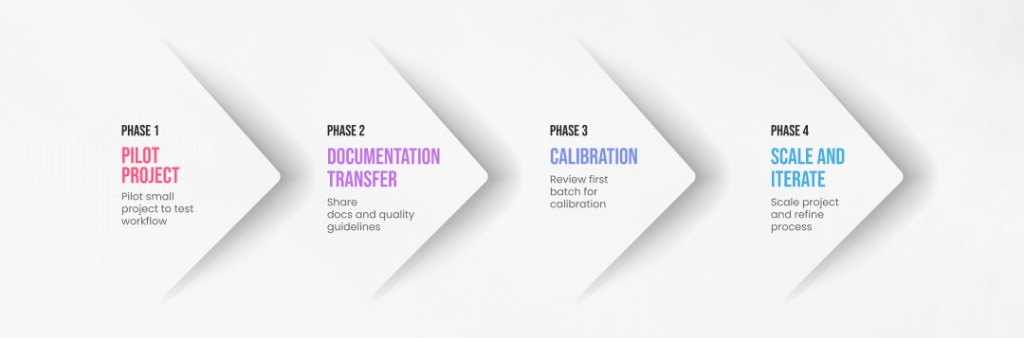

- Making the Switch to Managed Annotation

- Bottom Line: Management Makes the Difference

- Ready to Stop Managing and Start Building?

Annotation Project Management: Why It Makes or Breaks Your AI Development

You’ve got the data, the model, and a team of annotators. Six weeks later, you’re drowning in bad labels, missed deadlines, and endless clarification meetings.

Sound familiar?

Here’s the hard truth: Annotation isn’t just labeling; it’s complex project management.

Most AI teams completely underestimate this. They think it’s the easy part, and it quickly becomes the biggest bottleneck. Poor management is a silent killer for AI. It leads to wasted budgets, delayed launches, and training data so inconsistent it tanks your model’s performance.

Why is it so hard?

- Vague Guidelines: “Label all the cars” sounds easy. But what about a car on a billboard? Or one that’s 90% hidden? Without crystal-clear rules, you get chaos.

- No Real QA: You can’t just “check” 50,000 images at the end. You need a constant process to track annotator agreement and catch errors as they happen.

- Team Burnout: Your best ML engineer shouldn’t be spending 20 hours a week answering labeling questions. That’s a massive waste of talent and money.

Good management is invisible.

When it’s done right, your technical team can focus on building models, not managing spreadsheets. It means clear, shared rules for every edge case. It means proactive quality control, not last-minute panic.

You can build this entire system yourself, but it’s a full-time job. The alternative is to partner with a managed service such as our platform, GetAnnotator, which gives you a fully vetted team and an experienced project manager dedicated to your project’s needs.

Don’t let a management problem kill your AI project.

What Is Annotation Project Management?

Before looking at annotation project management, start with project management itself. Beyond the textbook definition, it is the process of planning, coordinating, executing, and quality-controlling work from the very beginning of a project to its end.

Annotation project management is not something entirely different. It applies the same discipline to data labeling and simply requires an additional understanding of AI/ML.

When done well, annotation project management is invisible—everything just flows smoothly. When done poorly, it becomes the bottleneck that delays your entire AI project.

The Hidden Costs of Poor Annotation Management

Most teams don’t realize how expensive bad annotation management really is until they calculate the actual costs.

- Rework and Corrections: When annotation guidelines aren’t clear or quality control is weak, you end up with inconsistent data. Fixing this means going back and re-annotating large portions of your dataset.

One AI startup we spoke with had to re-annotate 40% of its training data because its initial guidelines were too vague. That’s almost half of its annotation budget wasted.

- Delayed Model Development: Your ML engineers can’t start training until they have quality data. Every week that annotation delays model development is a week your product isn’t getting better.

For startups trying to hit investor milestones or enterprises launching new features, these delays have real business consequences.

- Team Burnout: When someone on your technical team has to manage annotators on top of their actual job, something suffers. Usually, both do.

Your senior ML engineer shouldn’t be spending 15 hours a week answering annotator questions and reviewing edge cases. That’s $2,000+ per week in opportunity cost for work that could be handled by a dedicated project manager.

- Inconsistent Quality: Without proper management, different annotators interpret guidelines differently. This creates noisy training data that hurts model performance.

You might have 100,000 labeled images, but if 30% are labeled inconsistently, your model learns the wrong patterns. That’s not a data problem—that’s a management problem.

- Communication Overhead: When annotation management is disorganized, simple questions become hour-long email chains. Decisions get lost. Context disappears between conversations.

All of this creates friction that slows everything down.

Key Challenges in Managing Annotation Projects

Let’s break down the specific challenges that make annotation project management so difficult:

Challenge 1: Defining Clear Guidelines.

Writing annotation guidelines sounds simple. It’s not.

Guidelines need to be detailed enough to ensure consistency but flexible enough to handle edge cases. They need to be understandable to annotators without deep technical knowledge but rigorous enough to produce ML-grade quality.

Most teams start with guidelines that are either too vague (“label all the cars”) or too rigid (50 pages of specifications that nobody reads). Finding the right balance takes experience.

Challenge 2: Maintaining Quality at Scale.

Quality control is straightforward when you have one annotator working on 100 images. What about when you have 10 annotators working on 50,000 images across multiple data types?

You need systematic QA processes, inter-annotator agreement tracking, and the ability to identify when quality is slipping before you’ve wasted weeks of work.
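Inter-annotator agreement is usually reported with a chance-corrected statistic rather than raw percent agreement. As one illustration (not a description of any particular platform’s tooling), here is a minimal Python sketch of Cohen’s kappa for two annotators who labeled the same items; the function name and the sample labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty list of items")
    n = len(labels_a)

    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        # Both annotators used one identical label everywhere; kappa is undefined,
        # so report perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)
```

For example, with `a = ["car", "car", "van", "truck", "car", "van"]` and `b = ["car", "van", "van", "truck", "car", "car"]`, the annotators agree on 4 of 6 items (about 67%), but kappa is 10/22, roughly 0.45, once chance agreement is removed. For more than two annotators, Fleiss’ kappa or Krippendorff’s alpha are the usual generalizations.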

Challenge 3: Handling Edge Cases.

Real-world data is messy. There will always be examples that don’t fit neatly into your categories.

Is that a truck or a van? Does this sentence express positive or negative sentiment? How do you label partially visible objects?

Someone needs to make consistent decisions on these edge cases and communicate those decisions to the entire annotation team. Without good management, different annotators make different calls, and your data becomes inconsistent.
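One lightweight way to keep edge-case calls consistent is to surface the items where annotators disagree and record each ruling in a single shared place. The sketch below assumes labels are collected per item as a list and uses a hypothetical `EdgeCaseLog` class; it illustrates the idea and is not any specific tool’s API.

```python
from dataclasses import dataclass, field
from typing import Optional


def disagreements(labels_by_item: dict[str, list[str]]) -> list[str]:
    """Items whose annotators did not all choose the same label: candidates for a ruling."""
    return [item for item, labels in labels_by_item.items() if len(set(labels)) > 1]


@dataclass
class EdgeCaseLog:
    """Hypothetical shared log of edge-case rulings, keyed by a short case description."""
    rulings: dict[str, str] = field(default_factory=dict)

    @staticmethod
    def _key(case: str) -> str:
        # Normalize case and whitespace so near-identical descriptions hit the same ruling.
        return " ".join(case.lower().split())

    def record(self, case: str, label: str) -> None:
        # Re-recording a case overwrites it, so the latest ruling is the one everyone sees.
        self.rulings[self._key(case)] = label

    def lookup(self, case: str) -> Optional[str]:
        # Annotators check the log before making their own call; None means "ask the PM".
        return self.rulings.get(self._key(case))
```

The point of the design is a single source of truth: once the project manager rules that a car on a billboard is not a car, every annotator gets the same answer from the log instead of making their own call.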

Challenge 4: Resource Allocation.

Not all annotation tasks require the same skill level. Simple bounding boxes can be done by less experienced annotators. Medical image segmentation needs specialists.

Poor project management wastes your expert annotators on simple tasks while putting inexperienced people on complex work. Both scenarios hurt efficiency and quality.
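Difficulty-based allocation can be expressed as a simple routing rule: send each task to the least specialized annotator who is qualified for it. In this sketch the tier numbers, the `REQUIRED_TIER` table, and the task type names are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Task:
    task_id: str
    kind: str  # e.g. "bbox" or "medical_segmentation" (illustrative names)


# Hypothetical mapping from task type to the minimum skill tier it needs.
REQUIRED_TIER = {"bbox": 1, "classification": 1, "polygon": 2, "medical_segmentation": 3}


def route(tasks: list[Task], annotators: dict[str, int]) -> dict[str, str]:
    """Assign each task to the lowest-tier annotator qualified for it."""
    assignments: dict[str, str] = {}
    load = {name: 0 for name in annotators}
    for task in tasks:
        need = REQUIRED_TIER.get(task.kind, 3)  # unknown task types go to specialists
        qualified = [name for name, tier in annotators.items() if tier >= need]
        if not qualified:
            raise ValueError(f"no annotator qualified for {task.kind}")
        # Prefer the least senior qualified annotator, then the least loaded one,
        # so experts stay free for the work only they can do.
        chosen = min(qualified, key=lambda name: (annotators[name], load[name]))
        assignments[task.task_id] = chosen
        load[chosen] += 1
    return assignments
```

With this rule, a tier-3 specialist only receives bounding boxes if no lower-tier annotator is available. A production scheduler would also cap per-person load and account for deadlines, which this sketch leaves out.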

Challenge 5: Scope Creep and Changes.

Your annotation requirements will change as your project evolves. Maybe you realize you need additional labels, or your model is struggling with certain edge cases that need more training data.

Managing these changes without derailing the entire project requires careful coordination and clear communication.

What Good Annotation Project Management Looks Like

So what does it look like when annotation project management is done right?

- Clear Communication: Annotators know exactly what’s expected. Guidelines are clear and accessible. There’s a defined process for asking questions and getting answers quickly. Edge cases are documented and shared across the team so everyone stays consistent.

- Proactive Quality Control: Quality isn’t checked at the end—it’s monitored continuously. Potential issues are caught early when they’re cheap to fix.

Inter-annotator agreement is tracked. Outliers are identified and addressed. Regular calibration sessions keep everyone aligned.

- Realistic Planning: Timelines account for the actual complexity of annotation work. There’s a buffer for revisions and edge cases. Resources are allocated based on task difficulty.

Nobody’s scrambling at the last minute because of scope creep that no one anticipated.

- Transparent Progress Tracking: Stakeholders can see project status in real-time. There are no surprises about whether you’ll hit your deadline.

Bottlenecks are visible immediately, allowing for quick adjustments.

- Efficient Team Utilization: The right people are working on the right tasks. Expert annotators focus on complex work. Routine tasks are handled by skilled but less specialized team members.

Everyone’s time is used efficiently.
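To make “outliers are identified” concrete, one common approach is to compare each annotator’s labels against the majority vote on items that several people labeled. The sketch assumes at least three labels per item and an 80% agreement threshold; both are illustrative choices, not values from this article, and the function name is hypothetical.

```python
from collections import Counter


def flag_outliers(labels: dict[str, dict[str, str]], threshold: float = 0.8) -> dict[str, float]:
    """Return annotators whose agreement with the majority label is below `threshold`.

    `labels` maps item_id -> {annotator_name: label}.
    """
    hits: Counter = Counter()  # items where the annotator matched the majority
    seen: Counter = Counter()  # items that counted toward the annotator's score
    for votes in labels.values():
        if len(votes) < 3:
            continue  # too few votes for a meaningful majority
        majority, count = Counter(votes.values()).most_common(1)[0]
        if count * 2 <= len(votes):
            continue  # no strict majority: a likely edge case, not one annotator's error
        for annotator, label in votes.items():
            seen[annotator] += 1
            if label == majority:
                hits[annotator] += 1
    rates = {name: hits[name] / seen[name] for name in seen}
    return {name: rate for name, rate in rates.items() if rate < threshold}
```

Items without a clear majority are skipped on purpose: those are better routed to edge-case review than counted against any single annotator. A flagged annotator is a prompt for a calibration session, not automatic proof of poor work.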

The GetAnnotator Approach for your projects

This is where a platform like ours, GetAnnotator, fundamentally changes the game.

Most annotation projects fail not because of technical complexity but because of management complexity. GetAnnotator removes that burden entirely.

Built-in Project Management: With GetAnnotator’s Advanced and Expert tiers, you get a dedicated project manager who handles all the coordination, communication, and quality control.

You’re not managing annotators—you’re collaborating with a project manager who’s done this hundreds of times before.

Pre-Vetted Teams: The annotators aren’t random freelancers you need to train from scratch. They’re professionals from a pool of 200+ vetted specialists who already understand annotation best practices.

This means less onboarding time and fewer quality issues from the start.

Real-Time Dashboards: No more wondering where your project stands. GetAnnotator provides live dashboards showing progress, quality metrics, and timeline projections.

You can spot problems early and make informed decisions about resource allocation.
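Timeline projections of the kind a progress dashboard shows can be as simple as dividing the remaining work by recent throughput. This naive sketch assumes a constant daily rate and counts calendar days; the function name is hypothetical and it does not describe how GetAnnotator computes its projections.

```python
import math
from datetime import date, timedelta


def projected_finish(total_items: int, done_items: int,
                     items_per_day: float, today: date) -> date:
    """Naive completion estimate: remaining items divided by recent daily throughput."""
    if items_per_day <= 0:
        raise ValueError("throughput must be positive")
    remaining = max(total_items - done_items, 0)
    # Round up: a partial day of work still occupies a day on the calendar.
    days_left = math.ceil(remaining / items_per_day)
    return today + timedelta(days=days_left)
```

For example, 30,000 remaining items at 1,500 items per day projects 20 more days. Comparing the result with your deadline (`projected_finish(...) <= deadline`) gives an early warning while there is still time to add annotators.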

Integrated Communication: Built-in chat means questions get answered quickly without email chains or Slack threads that lose context.

Everything related to your project stays in one place, making it easy to track decisions and maintain consistency.

Quality Assurance Infrastructure: Quality control isn’t an afterthought. It’s built into the workflow with systematic review processes, accuracy tracking, and continuous feedback loops.

Flexible Scaling: Need to ramp up for a deadline? Scale back when a phase completes? GetAnnotator’s project management accommodates these changes without the HR headaches of hiring and firing.

Comparing Management Approaches

Let’s look at how different approaches to annotation project management compare:

DIY In-House Management: You hire annotators and manage them yourself.

- Pros: Full control, direct communication

- Cons: Massive time investment, steep learning curve, ongoing overhead

- Best for: Companies with dedicated annotation teams and experienced managers

Freelance Platform Management: You coordinate multiple freelancers through platforms like Upwork.

- Pros: Flexibility, potentially lower cost

- Cons: High coordination burden, quality variability, no built-in QA

- Best for: Very small projects with simple requirements

Traditional Annotation Services: Large BPO-style annotation companies.

- Pros: Handle large volumes, established processes

- Cons: Slow to start, rigid, expensive, black-box quality control

- Best for: Enterprise-scale projects with very large budgets

GetAnnotator Managed Teams: Subscription-based with built-in project management.

- Pros: Fast start (24 hours), experienced management, transparent quality control, flexible scaling

- Cons: Requires a monthly commitment

- Best for: AI teams who want quality results without management overhead

The right choice depends on your specific situation, but for most AI teams, the managed approach offers the best balance of quality, speed, and efficiency.

When to Scale Up Project Management Support

How do you know when you need more robust annotation project management?

Signs you need better management:

- You’re spending more than 10 hours/week on annotation coordination

- Quality issues are discovered late in the project

- Deadlines are consistently missed

- Different annotators are producing inconsistent results

- Edge case decisions aren’t being documented

- Your technical team is complaining about annotation delays

- Rework and corrections are eating up 20%+ of your budget

If you’re experiencing several items from this list, it’s time to either level up your internal management capabilities or partner with a managed service such as GetAnnotator.

Choosing the Right Management Tier

GetAnnotator offers three tiers with different levels of project management support:

Skilled Plan ($499/month): Best if you can handle coordination internally and just need quality annotators.

- You manage task assignment and quality control

- Direct communication with your annotator

- Good for straightforward projects with clear requirements

Advanced Plan ($649/month): The sweet spot for most teams, since it includes a project manager.

- The project manager handles coordination and quality control

- Daily reporting and progress tracking

- Enhanced workflow support

- Perfect when you want annotation off your plate

Expert Plan ($899/month): For complex, high-stakes projects requiring sophisticated management.

- Dedicated project manager with deep domain expertise

- Priority support and SLA guarantees

- Real-time dashboard access

- Best for regulated industries or complex data types

Most AI teams find the Advanced tier offers the right balance—enough management support to remove the burden from their technical team without the premium cost of the Expert tier.

The ROI of Good Project Management

Let’s talk numbers. What’s the actual return on investment for good annotation project management?

Time Savings: If your ML engineer makes $150K/year, that’s roughly $75/hour. If good project management saves them 10 hours per week, that’s $750/week or $3,000/month in recovered productivity.

The Advanced plan costs $649/month and saves you $3,000/month in engineering time. That’s 4.6x ROI before considering any other benefits.
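The arithmetic above can be reproduced in a few lines. This sketch assumes 2,000 working hours per year and 4 weeks per month, which are the assumptions that turn $150K into $75/hour and $750/week into $3,000/month; the function name is hypothetical. Note that the 4.6x figure is the ratio of savings to cost, while net ROI, (savings - cost) / cost, comes out to about 3.6x.

```python
def engineering_time_roi(salary_per_year: float, hours_saved_per_week: float,
                         plan_cost_per_month: float, work_hours_per_year: int = 2000,
                         weeks_per_month: int = 4) -> dict[str, float]:
    """Value of engineering hours recovered, compared with a monthly plan cost."""
    hourly = salary_per_year / work_hours_per_year   # $150K / 2,000 h = $75/h
    weekly = hourly * hours_saved_per_week           # 10 h * $75 = $750/week
    monthly = weekly * weeks_per_month               # 4-week month approximation
    return {
        "hourly": hourly,
        "monthly_savings": monthly,
        "savings_to_cost": monthly / plan_cost_per_month,
        "net_roi": (monthly - plan_cost_per_month) / plan_cost_per_month,
    }
```

Using 52 / 12, about 4.33 weeks per month, instead of 4 would raise the monthly figure to roughly $3,250, so the 4-week assumption is the conservative one.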

Quality Improvement: Better project management typically improves annotation quality by 15-30%. Higher quality training data means better model performance, which translates to better product outcomes.

For a computer vision product, even a 5% improvement in accuracy can be the difference between a product that works and one that doesn’t.

Faster Time to Market: Good project management accelerates annotation timelines by 30-50% compared to poorly managed projects.

For a startup, shipping your product 2 months earlier could decide whether you reach your next milestone before your funding runway runs out.

Reduced Rework: Poor management leads to 20-40% rework rates. Good management keeps this under 10%.

On a $50,000 annotation project, that’s potentially $15,000 saved in avoided rework.
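The $15,000 figure corresponds to the upper end of that range: cutting rework from 40% to 10% of a $50,000 budget. A minimal sketch of the calculation, with a hypothetical function name:

```python
def rework_savings(budget: float, rework_before: float, rework_after: float) -> float:
    """Budget no longer spent on rework when the rework rate falls (rates as fractions)."""
    if not 0 <= rework_after <= rework_before <= 1:
        raise ValueError("expected 0 <= rework_after <= rework_before <= 1")
    return budget * (rework_before - rework_after)
```

At the low end of the range (20% down to 10%), the same calculation gives $5,000, so the realistic saving depends on how high your starting rework rate is.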

When you add it all up, investing in good annotation project management isn’t a cost; it’s a massive cost saver.

Making the Switch to Managed Annotation

If you’re currently managing annotation yourself and considering a switch to a managed service like GetAnnotator, here’s how to make the transition smooth:

- Phase 1: Pilot Project. Start with a small project to test the platform and working relationship. This lets you evaluate quality and communication without committing your entire annotation pipeline.

- Phase 2: Documentation Transfer. Share your existing guidelines, edge case documentation, and quality standards. Good project managers will adapt these to their workflow while maintaining your requirements.

- Phase 3: Calibration. The first batch of annotations should go through extra careful review to ensure the new team understands your standards. This calibration period is normal.

- Phase 4: Scale and Iterate. Once quality and workflow are validated, scale up to your full project. Continue providing feedback to refine the process.
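Calibration (Phase 3) is often run against a small gold-standard set whose correct labels you have already agreed on. The sketch below scores a batch against such a set; the 95% pass threshold and the function name are assumptions for illustration, not a GetAnnotator requirement.

```python
def calibration_report(submitted: dict[str, str], gold: dict[str, str],
                       pass_threshold: float = 0.95) -> tuple[float, list[str], bool]:
    """Score a calibration batch against gold-standard labels.

    Returns (accuracy on gold items, ids of gold items labeled differently, passed?).
    """
    scored = [item for item in gold if item in submitted]
    if not scored:
        raise ValueError("no overlap between the batch and the gold set")
    misses = [item for item in scored if submitted[item] != gold[item]]
    accuracy = 1 - len(misses) / len(scored)
    return accuracy, misses, accuracy >= pass_threshold
```

The list of misses matters as much as the score: reviewing those specific items with the new team is usually the fastest way to surface guideline gaps before scaling up.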

Most teams find they can transition successfully within 1-2 weeks.

Bottom Line: Management Makes the Difference

Here’s what it comes down to: the difference between annotation projects that succeed and those that struggle isn’t usually the difficulty of the annotation task itself. It’s the quality of project management.

You can have the best annotators in the world, but without proper management, you’ll still end up with delays, quality issues, and wasted budget.

You can have clear guidelines and sophisticated QA processes, but if nobody’s coordinating the work and making decisions on edge cases, the project will drift off course.

Good annotation project management is the invisible infrastructure that makes everything else work.

For most AI teams, the question isn’t whether to invest in good project management—it’s whether to build that capability in-house or partner with specialists who’ve already solved these problems.

Platforms like GetAnnotator exist specifically to remove the project management burden from AI teams. You get experienced project managers, established workflows, quality control infrastructure, and transparent tracking—all without hiring, training, or managing these capabilities yourself.

Ready to Stop Managing and Start Building?

If you’re tired of annotation project management consuming your team’s time and energy, there’s a better way.

GetAnnotator delivers pre-vetted annotation teams with built-in project management. Your project starts in 24 hours. Progress tracking is real-time. Quality control is systematic. And your technical team can focus on what they’re actually good at—building AI.

No recruitment hassles. No coordination overhead. No quality surprises.

Just professional annotation project management that works, so you can focus on building better models.

Visit getannotator.com to see how managed annotation teams can accelerate your AI development—or reach out to discuss which management tier fits your project needs.

Your next breakthrough shouldn’t be delayed by annotation logistics. Let’s fix that.
